GURU editors and NES Professor Olga Kuzmina decided to test ChatGPT's knowledge of economics. The results of the experiment are available here. And for those who would like to learn all the details, we have written the article below.

Ekaterina Sivyakova

Our "examination board" included NES Professor Olga Kuzmina, GURU journalist Ekaterina Sivyakova and editor-in-chief Philip Sterkin. We compiled a set of tasks in English, divided into four blocks, to check:

- whether ChatGPT is able to analyze economic issues and find gaps in academic knowledge;

- whether ChatGPT can solve several economic tasks (by this we wanted to understand whether students can use it for such tasks);

- what forecasts ChatGPT can make;

- and whether it can give psychological advice.

We spent two hours communicating with ChatGPT in English (the experiment was conducted on March 29), deliberately making mistakes in some statements in order to understand how artificial intelligence would read the general context of the conversation. Introducing ourselves, we said that we were talking on behalf of NES, and asked the chatbot to give clear and accurate answers, avoid unnecessary details and not give false information (spoiler: it still lied to us). We asked the AI to try on different roles: a professor of economics, a researcher, an economic journalist and even a tutor. Olga Kuzmina assessed the quality of the answers on economics.

The chatbot answered the questions from the first block very slowly, making many mistakes. It coped better and faster with solving tasks in microeconomics and managed to collect correct, albeit banal, tips for solving psychological issues.

It should be noted that several times during the experiment we had to start a new chat due to technical problems, the causes of which we do not know and which could be due to the network quality. This could have affected how the AI determined the context of the entire conversation. Several times the chatbot froze and reported a technical error midway through a response.

When asked which pronoun was preferable for it, the chatbot replied: "As an artificial intelligence language model, I don't have a gender identity, so you can refer to me using any pronoun you prefer."

Scholarly Laurels

We asked the chatbot to pretend that it was a research economist and analyze a database of academic research on the impact of women's representation on corporate boards on firm value and operations, as well as to briefly describe the gaps in this field of economics. The first version of the answer, which ended with a technical error, looked quite convincing, but that was only an impression.

Olga Kuzmina: "It referred to quite relevant and widely cited research with very telling titles containing all the mentioned keywords. However, the chatbot made mistakes in the description of each study.

For the study by Renée B. Adams and Daniel Ferreira (2009), the chatbot's conclusion was exactly the opposite of the original, which finds that women's representation on boards of directors has a negative effect on firm performance (return on investment). In the description of the study by David A. Carter, Betty J. Simkins and W. Gary Simpson (2003), the chatbot was not mistaken about the effect, but confused the metrics. There was also an error in the description of the study by Jiekun Huang and Darren J. Kisgen (2013): it examines overconfidence, and not audit and monitoring committees, as the chatbot wrote.

The final study proposed by the chatbot, by Kenneth R. Ahern and Amy K. Dittmar (2012), is very widely cited and looks specifically at the impact of women holding management positions on a firm's value. And, contrary to the chatbot's "opinion", it does not talk about corporate social responsibility, much less about philanthropy and environmental protection."

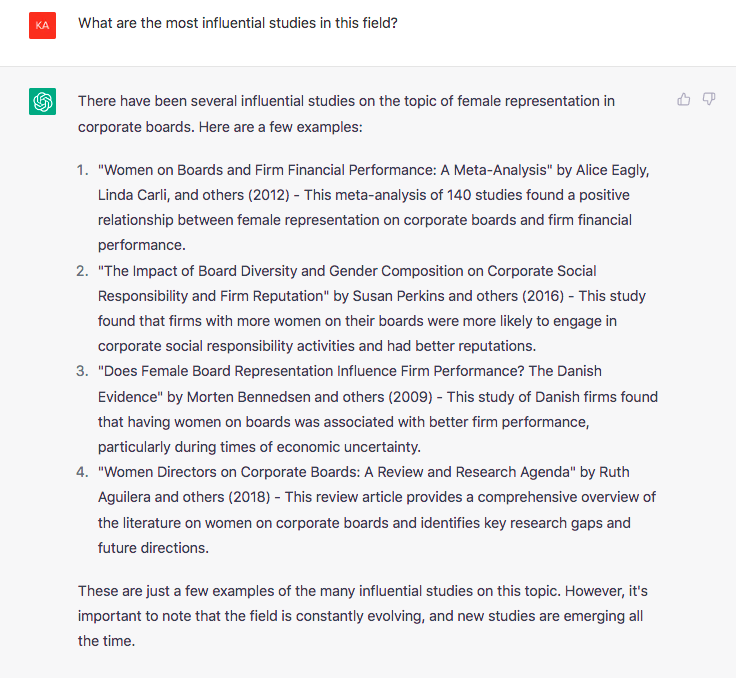

The chatbot began the second attempt to answer the question with the statement that empirical evidence suggests that having women on boards of directors is associated with positive outcomes for firms in terms of both value and operations. Next, it went on with the justification of this idea. In response to a question about the most influential studies in this area, the chatbot produced a list of four studies, noting that those were "a few examples." After being asked to indicate research that has been published in academic journals on finance, the chatbot returned an error. And checking the previous answer gave us a big surprise.

Screenshot of ChatGPT’s second answer about the most influential studies

The list immediately puzzled Olga Kuzmina: "Alice Eagly and Linda Carli study gender mainly from the point of view of leadership psychology, and not at all in terms of firm profitability. Susan Perkins looks at it from the point of view of leadership in countries, and she did not write anything about firms in general, or specifically about women on boards of directors. Morten Bennedsen was the only one on the list who deals with empirical corporate finance, but not with the topic of women on boards of directors. Ruth Aguilera mostly wrote about boards of directors in the context of international business practices, and not about women's representation."

Suspicions were confirmed: it turned out that studies with such titles exist, but they have other authors and were published at a different time than the one indicated by the chatbot.

- The first study "Women on Boards and Firm Financial Performance: A Meta-Analysis" was actually published in 2014 and written by Corinne Post and Kris Byron (at that time working at Lehigh University, USA).

- The second study, on the impact of board diversity and gender composition on corporate social responsibility and firms' reputation, was published in 2010 by Stephen E. Bear (Fairleigh Dickinson University), Noushi Rahman (Pace University) and Corinne Post.

- The third study on whether female board representation influences firm performance was conducted by Professor Caspar Rose of the Copenhagen Business School (published in 2007).

- The fourth study, on how gender diversity on corporate boards affects corporate governance and how governance, in turn, affects firms' performance, was written by Siri Terjesen (Florida Atlantic University), Ruth H.V. Sealy (University of Exeter) and Val Singh (Cranfield University) and published in 2009.

About the Turkey earthquake and chatbot errors

Next, we asked ChatGPT to act like an economic journalist and highlight the main ideas of the column by the European Bank for Reconstruction and Development experts on the consequences of the earthquake in Turkey and Syria in February 2023. One of its authors is Maxim Chupilkin, NES graduate and EBRD macroeconomic analyst. We included a direct web link to the column in our inquiry to the chatbot. It did not cope with this task: ChatGPT produced general discussions about how much Turkey had suffered from earthquakes, and "quoted" the authors' calls to take urgent measures. Meanwhile, the column does not mention this at all: it is devoted to a model-based comparison of the impact of the 1999 and 2023 earthquakes on the country's economy ("early estimates" are given for 2023) and is accompanied by data on other countries.

About options in simple language

In the next task, we wanted to test the chatbot's ability to explain complex economic concepts in simple words. We invited it to become a professor of economics who explains the Black-Scholes option pricing model to high school students.
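For readers who have not met the model, here is a minimal sketch of the Black-Scholes price of a European call option on a stock that pays no dividends. The function names and the input values (stock price, strike, interest rate, volatility, maturity) are hypothetical and chosen only for illustration; they are not taken from the experiment.

```python
from math import erf, exp, log, sqrt


def norm_cdf(x: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def black_scholes_call(spot: float, strike: float, rate: float,
                       vol: float, maturity: float) -> float:
    """Black-Scholes price of a European call on a non-dividend-paying stock.

    spot     - current stock price
    strike   - price at which the option holder may buy the stock
    rate     - continuously compounded risk-free rate (per year)
    vol      - annualized volatility of the stock's returns
    maturity - time to expiry in years
    """
    d1 = (log(spot / strike) + (rate + 0.5 * vol ** 2) * maturity) / (vol * sqrt(maturity))
    d2 = d1 - vol * sqrt(maturity)
    return spot * norm_cdf(d1) - strike * exp(-rate * maturity) * norm_cdf(d2)


# Hypothetical inputs, for illustration only.
stock_price = 100.0
call_price = black_scholes_call(spot=stock_price, strike=100.0,
                                rate=0.05, vol=0.20, maturity=1.0)
print(f"stock price: {stock_price:.2f}, call option price: {call_price:.2f}")
```

The output makes one point that matters for any simplified explanation: the option is priced separately from the stock and costs only a fraction of it, so the two cannot be treated as the same thing.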

"The answer was quite accurate, but it would hardly suit a high school student because of too many terms," Olga Kuzmina notes.

Then we changed the task, asking the chatbot to explain the Black-Scholes formula to a 10-year-old kid. At first, artificial intelligence used a creative approach and an analogy with buying a toy, i.e. a concept that would be clear to a child, but the answer was not accurate enough.

"The idea of making a comparison with a toy is interesting, but there were mistakes, for example, implicitly equating stocks with options on them," Professor Kuzmina explains.

Could ChatGPT have helped Olga Kuzmina?

The experiment continued. We gave the chatbot a link to Olga Kuzmina's research "Gender Diversity in Corporate Boards: Evidence From Quota-Implied Discontinuities," and asked it to write a new abstract for it. The AI did not cope with the task.

"The abstract has nothing to do with the research, which says nothing about the corporate social responsibility that the chatbot writes about," Professor Kuzmina stated, suggesting that, apparently, the chatbot's topic is consonant with (statistically close to) the gender diversity issues named in the title.

After that we changed the task and asked artificial intelligence to act as if it were the author of that article and write an abstract with the main findings. This time we didn't give it a web link to the research, hoping that the chatbot would understand that we were talking about the same study. This attempt ended in complete failure: ChatGPT stated that the work examines the effect of microplastics on aquatic ecosystems, and described the allegedly conducted laboratory experiments and their results.

We repeated the same inquiry with a web link to Olga Kuzmina's study, but the chatbot returned to the first incorrect version about corporate social responsibility.

And could it help an NES PR manager?

We suggested that the chatbot change its profession again and act as an NES PR manager who needs to analyze comments on a study by NES Professor Sultan Mehmood about how Ramadan fasting affects the quality of decision-making of judges in Pakistan and India. We asked the AI to collect quotes and group them.

ChatGPT sorted comments into academic citations – positive and critical, media and public comments. However, the answer of the chatbot did not contain the authors’ and outlets’ names. And, unfortunately, it also ended with a technical error.

Solving economic tasks

In this part of the experiment, we asked ChatGPT to solve several economic tasks that are given to students. Believe it or not, artificial intelligence coped with them relatively well.

The first task from Olga Kuzmina was formulated as follows: estimate the Fama-French 3-factor model for Microsoft stock returns, using all available historical data for Microsoft returns from Finance.Yahoo.com and the factor data from Ken French’s website. (The model takes into account market risk, as well as risks related to the size and value, or undervaluation, of companies.) Based on this analysis, ChatGPT was supposed to conclude whether Microsoft is a growth or a value stock (a fast-growing, often technology company or a stable and strong company, respectively).

Olga Kuzmina: "In general, all the generic boilerplate in the answer was put together well, which was expected, since it is probably the best-known model for evaluating stock returns. But the actual answer on whether it is a value or a growth stock was incorrect. And there was no analysis either, although formally all the data is available."
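To give a sense of what the missing analysis would look like, here is a minimal sketch of the regression behind the task. It runs on synthetic data, not on real Microsoft returns: the simulated factor series, the "true" loadings and the function names `estimate_ff3` and `classify_style` are all assumptions made for illustration. In a real exercise the inputs would be the stock's monthly excess returns and the three factor series (market, SMB, HML) aligned by date. Reading the sign of the HML loading as a growth or value tilt is a common rule of thumb, not a verdict on any particular stock.

```python
import numpy as np


def estimate_ff3(excess_ret, mkt_rf, smb, hml):
    """OLS estimate of r - rf = alpha + b*MKT + s*SMB + h*HML + error."""
    X = np.column_stack([np.ones_like(mkt_rf), mkt_rf, smb, hml])
    coef, *_ = np.linalg.lstsq(X, excess_ret, rcond=None)
    names = ["alpha", "beta_mkt", "beta_smb", "beta_hml"]
    return dict(zip(names, coef))


def classify_style(beta_hml):
    """Rule of thumb: a negative HML loading suggests a growth tilt,
    a positive one a value tilt."""
    return "value" if beta_hml > 0 else "growth"


# Synthetic monthly data standing in for the real series (assumption).
rng = np.random.default_rng(0)
n_months = 360
mkt_rf = rng.normal(0.006, 0.045, n_months)   # market excess return
smb = rng.normal(0.002, 0.030, n_months)      # size factor
hml = rng.normal(0.003, 0.030, n_months)      # value factor

# Hypothetical "true" loadings used only to generate the fake stock returns.
excess_ret = (0.002 + 1.1 * mkt_rf - 0.3 * smb - 0.5 * hml
              + rng.normal(0.0, 0.02, n_months))

betas = estimate_ff3(excess_ret, mkt_rf, smb, hml)
style = classify_style(betas["beta_hml"])
print({name: round(float(value), 3) for name, value in betas.items()}, style)
```

With real data, the same two steps apply: estimate the loadings, then look at the sign and statistical significance of the HML coefficient before calling the stock growth or value.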

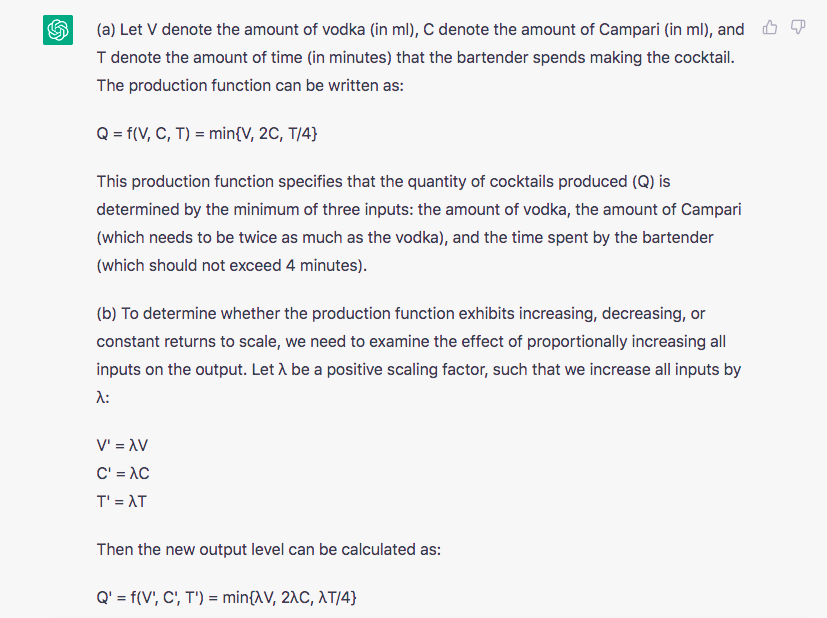

The description of the second task was the following:

Solve the following microeconomics 101 problem. At Shindler's you can buy a cocktail made with one part Vodka, two parts Campari and 4 minutes of the bartender's time.

(a) Write down the production function (assume continuous inputs and output).

(b) Answer the question: does this production function exhibit increasing, decreasing or constant returns to scale?

Screenshot of the solution of the second task

Olga Kuzmina: "This is not bad, there is one minor mistake (2C instead of C/2), but otherwise the conclusions are correct. This is an example of a typical economic task."
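For reference, the problem can be written out as follows if the recipe is read as a fixed-proportions (Leontief) technology, which is the usual reading of such a task and an assumption here. The symbols are notation chosen for illustration: q is the number of cocktails, V and C are parts of vodka and Campari, and T is minutes of the bartender's time.

```latex
q = f(V, C, T) = \min\left\{ V,\ \frac{C}{2},\ \frac{T}{4} \right\}

f(\lambda V, \lambda C, \lambda T)
  = \min\left\{ \lambda V,\ \frac{\lambda C}{2},\ \frac{\lambda T}{4} \right\}
  = \lambda\, f(V, C, T), \qquad \lambda > 0
```

Scaling all inputs by the same factor scales output by exactly that factor, so the function exhibits constant returns to scale. Campari enters as C/2 because one cocktail requires two parts of it; this is the term that the chatbot wrote as 2C.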

The model predicts the future

In this part of the experiment, we asked the chatbot to give a forecast, acting as an economist. We asked it what "black swans" could be expected in the global economy. Instead, it explained the concept of "black swans" and how they can affect the economy. The only example that the AI gave us was the COVID-19 pandemic.

The next question we asked was whether humanity can overcome economic inequality. The chatbot replied that it is possible, but specified that it will require a sustained and concerted effort by policymakers, businesses, and individuals. The recipe for achieving economic equality, in the AI’s opinion, was the following: progressive taxation, the development of the social security system, investments in education and training, and support for small and medium-sized enterprises. Individuals, it said, can contribute by "advocating for policies that promote social and economic equality, supporting businesses that promote fair labor practices and social responsibility, and participating in community development programs that promote economic growth and opportunity."

We asked the chatbot to provide facts in support of its position, and it produced five, referring to information from Oxfam, the OECD, the IMF, Pew Research Center, as well as the Harvard Business Review magazine. Impressed by such a list, we asked the chatbot to give direct links to the mentioned documents. It immediately produced a list of links that looked quite plausible, but turned out to redirect to non-existent pages. The links did refer to the websites of the mentioned organizations, but when we clicked through, a message appeared that there was no such page.

Screenshot of the page to which the link from ChatGPT under the title "Pew Research Center Survey of Public Opinion on Economic Inequality" redirected

However, later it turned out that all the documents and data referred to by the chatbot exist, but are located on other pages:

- here is Oxfam data proving that during the pandemic, 10 billionaires doubled their fortune, while the incomes of millions of people were falling;

- OECD report showing that investment in education can reduce inequality;

- the text "Reduce Inequality to Create Opportunities" by IMF Managing Director Kristalina Georgieva in the IMF Blog, which was probably meant by the chatbot;

- a study by Harvard University Professor George Serafeim on how firms’ socially oriented efforts can create real value, published in the Harvard Business Review;

- a Pew Research survey of Americans on inequality.

All these texts date back to 2020-2021.

We asked ChatGPT to become an economist again and tell us when gender gaps in the economy will be closed. The answer began with the same remark as the previous one (about sustained and concerted effort), and then the chatbot referred to the World Economic Forum, which estimates that it will take 135.6 years for the gender gap in wages in the world to close given its current trajectory. The fact-check showed that the figure was correct. The rest of the answer was a list of actions to bridge gender gaps. They were very similar in kind to the proposed actions to eliminate economic inequality: according to the chatbot, efforts of governments, businesses and individuals are also needed to reduce gender gaps.

Again, we asked ChatGPT to provide facts in support of its position. The chatbot offered six, and this time accompanied them with inactive links to the original sources.

“How many hours a day will people work in 10 years?” was our next inquiry. Finding it difficult to predict this "with certainty", the chatbot referred to "many factors" affecting the number of working hours, and listed three of them:

- automation of routine tasks with the help of robotics and AI technologies can reduce the demand for certain types of labor and at the same time create new jobs;

- demographic shifts: as the population ages, the number of jobs may decrease;

- social norms: in recent years, people have begun to pay more attention to work-life balance, which may lead to a shorter working week or a more flexible work schedule.

The chatbot concluded: "It is likely that the number of hours worked will continue to evolve."

We decided to clarify whether the aging population would be more likely to reduce or increase the number of working hours in 10 years. The chatbot repeated the arguments from the previous answer: as the population ages, the number of working people may decrease, which, in turn, may lead to higher wages and reduced availability of certain types of jobs. At the same time, it clarified that older workers may prefer to work less or retire earlier, but then gave a counterargument: "As the number of older adults increases, there could be greater demand for healthcare services and other industries that serve older populations, creating new jobs and potentially increasing the number of hours worked in these sectors."

Screenshot of the answer to the question about demographic shifts (we intentionally made a language error in the wording of the question)

Conversation for the benefit of students

Next, we asked the chatbot to take on the role of an academic advisor and give five recommendations on how Ph.D. students in economics can help themselves overcome the "depression of the third year" (an informal name for the period when "courses end and you need to come up with something new yourself, which is very difficult," as Ruben Enikolopov, NES Visiting Professor and ICREA Research Professor at Universitat Pompeu Fabra, explained).

ChatGPT suggested:

- seek support from peers – fellow Ph.D. students or recent graduates;

- ask for advice from the academic advisor;

- take a break and relax;

- seek out university mental health services that offer counseling or therapy;

- revisit your goals and motivations.

AI advice: "Take some time to reflect on your reasons for pursuing a Ph.D. in economics. Reconnecting with your original motivations and goals can help you regain your sense of purpose and drive during this challenging time. Consider setting new goals or revising your existing ones to better align with your interests and passions."

After that, we asked the chatbot to suggest how economics students can cope with burnout, and to make a list of 10 open educational resources where one can get useful and scientifically proven information on this topic. ChatGPT produced the following: the American Psychological Association's Help Center, the burnout checklist from the Mayo Clinic, Harvard Health Publishing of Harvard Medical School, the Mindful.org website, TED Talks, the Coursera learning platform, the World Health Organization, the National Institute of Mental Health (USA), the Anxiety and Depression Association of America, and the International Stress Management Association (UK).

Finally, we asked ChatGPT to act like an experienced economist and answer the question of how economists cope with impostor syndrome. The chatbot advised them to recognize that many economists experience this problem, make a list of their own strengths and accomplishments, seek support from colleagues and mentors, keep learning and developing skills (including attending conferences, reading academic journals and looking for new challenges), take care of their physical and mental health (get enough sleep and exercise regularly), and seek professional help, for example, therapy.

Back to the beginning

At the end of the experiment, we decided to return to the first set of questions and again ask the chatbot to analyze economic research, in the hope that our conversation had taught it something and that it would be able to give a better answer. However, the AI did not meet our expectations.

The task was to answer the question about the benefits that the research by NES President Shlomo Weber and his co-authors could bring to society. The chatbot wrote that this study shows how in the United States the car drivers’ race influences the decisions of police officers to search them, so the research can help in discussions about police reform and racial justice. ChatGPT's conclusion had nothing to do with the actual research, which, in fact, analyzes strategies for immigrants to learn the language spoken by the majority of the country of their destination. Perhaps the error was due to the fact that the question contained a link to a pdf file.

Next, we asked the chatbot to act like an economics professor, analyze and describe the practical value of the research of NES Professor Marta Troya-Martinez, and give an example of how the findings of this research can help businesses. ChatGPT stated that the study contributes to the field of economics by analyzing the impact of automation on the labor market. For example, it said, the study can help firms develop strategies for retraining and upskilling workers whose jobs are at risk of automation. This information does not correspond to the text of the research: in fact, it is a study of relational contracts (they are based on a trusting relationship between the parties, and the study elaborates on the theory of managed relational contracts). It should be noted that the question also contained a direct link to a pdf document on Dropbox.

When asked to describe empirical methods for assessing the causal relationship between vaccination and mortality from COVID-19, ChatGPT produced an error.

Finally, we decided to rule out incorrect reading of links as a factor. We uploaded the full text of the introduction (about 2000 words) from the study by Olga Kuzmina that was already discussed, and asked the chatbot first to summarize it in three paragraphs, and then to condense them into one paragraph.

Screenshot of ChatGPT's final answer to the inquiry to briefly describe the introduction to Olga Kuzmina's study

Olga Kuzmina called the three-paragraph version "not a bad one": "The sentences from the long text were taken quite organically, but the main findings of the research were described in a superficial manner." The short version again contained an error: the penultimate sentence did not correspond to reality.

Final assessment by NES Professor

Olga Kuzmina: "The chatbot gives good general answers when waffling, but since it distorts facts almost all the time, I would be rather wary even of its waffling. For example, in the middle of an apparently reasonable text, completely illogical conclusions or distortions of the basics may occur, for which a student would immediately receive an ‘F’. Perhaps, with more accurate sequential queries, ChatGPT would help save time, but in any case, a person who understands the topic will be needed to check the text written by AI. This is not surprising overall, because even people can't always 'read the Internet' and distinguish well between scientific facts and fiction. Even more challenging are the issues on which researchers themselves do not always agree with each other… As for problem solving, I think many professors already use ChatGPT to check their tasks for 'commonality'."